Embracing the Flawed: A Journey from the Sony eReader to the Apple Vision Pro

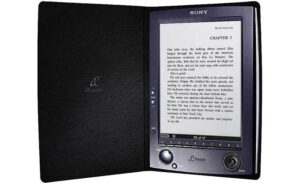

I bought my first e-reader back in September of 2006. The PRS-500, Sony’s first e-reader available in America, cost about $300 and featured digital ink (one of the first to do so), but had no ability on its own to link to the internet to get books. (One had to tether to a computer.) The resolution was okay, not great. I don’t remember exactly how many books it could hold — probably not a lot by today’s standards.

At the time, I was aware of discussions about e-readers but hadn’t seen any devices that I felt hit the mark. I knew even before purchase that the PRS-500 would have some significant drawbacks. I mean, it was only compatible with PCs! As it turned out, I had a Mac at home and a PC at work, and I remember on more than one occasion forgetting my eReader at home and being frustrated that I’d have to wait at least another full day before I could download a book I wanted. The online store looked clunky (and it was), and buying books was cumbersome, to say the least.

And yet, even knowing all this, I bought it anyway. BUT WHY? It was pretty simple, really. Even though I thought this e-reader would have flaws, I believed it would be sufficiently close to the right solution that it made sense to start reading some books from a screen sooner rather than later. More importantly, I hoped that by having some “skin in the game,” I would recognize the so-called right device when it emerged. The Sony eReader turned out to be perfectly suited to help me achieve these goals: it was good enough that I kept using it over the course of the next year, and in the process I came to have a clear understanding of how these devices worked and what they were good for. When Amazon released the Kindle just over a year later, I knew in an instant that I would like it: it was platform agnostic, required no tethering, featured a more readable screen, etc.

So how does this relate to the Apple Vision Pro? Is it just an extraordinarily expensive Sony eReader for spatial computing that you should consider buying in order to recognize the “right” device later on down the road? I don’t think so. I think the eReader equivalent was actually the Oculus Rift from several years ago, a forerunner to the Meta Quest 2 (soon to be the Meta Quest 3). The Rift wasn’t (and the Quest isn’t) the ideal headset, despite doing some pretty amazing things. The Quest’s price point is, and will remain, dramatically lower than the Vision Pro’s will ever be.

If you have told yourself that you might get a Vision Pro next spring, but don’t currently have a Quest, I would encourage you to go get one. You’ll start to appreciate what does and doesn’t work well currently within the world of spatial computing. And you’ll hit the ground running with the Vision Pro. Start thinking now, with the Quest, about how you could use it at work, at home, and to connect with others. Have fun with it. Explore. That way, when the Vision Pro is ready for launch, you’ll be ready for the Vision Pro.

To read all of Rob’s commentary on Apple Vision Pro click here: Part 1, Part 2, Part 3, Part 4, Part 5, Part 6, Part 8, Part 9, Part 10